Data from our 2026 State of People Strategy Report showed that while 39% of HR teams said they’re getting some or significant pressure from business leaders to increase efficiency with AI, 61% have ethical concerns about doing so.

This conflict between business demands and ethical responsibility is creating a new challenge for HR leaders in the age of AI.

Navigating through this tension relies on understanding which risks are genuine and focusing on the measures you can control: protecting data privacy, maintaining employee trust, and building AI systems that enhance human judgment, not replace it.

AI Technology: The Concerns, Risks, and Considerations

HR leaders are balancing two opposing challenges when it comes to integrating AI into people strategy: the immediate, operational risks tied to data privacy, compliance, and readiness, and the longer-term questions about how artificial intelligence could reshape fairness, transparency, and the future role of humans in HR.

The hardest part is knowing what’s fact and what’s pure science fiction.

3 AI Risks HR Leaders Need to Address Right Now

1. Keeping Employee Data Safe When Using AI

In a world where a data breach can land an organization in a multimillion-dollar class action lawsuit, data privacy is a critical priority for every HR leader. But often, the perceived threat is less about malicious actors and cyberattacks and more about organizations’ workflows for data processing and handling from within.

Your employees’ information is among the most sensitive data your organization holds, covering everything from salary and personal data to diversity, equity, inclusion, and belonging (DEIB) information that could put employees at direct risk if misused.

Inputting employee data into an AI system, for example, exposes a fundamental tension for HR leaders: They want to use their data to improve the employee experience while protecting employee privacy. One can’t come at the cost of the other — it’s crucial for HR to be thinking about data security every step of the way.

2. Feeling Uncertain About Organizational (and HR) Readiness

HR leaders know that, done well, AI can help them improve team and organizational efficiency and reduce the manual work that bloats HR’s calendar.

But many are waiting for exactly the right set of conditions before getting started — such as budget, training, and infrastructure. This, said Theresa Fesinstine, AI educator and founder of peoplepower.ai, is where analysis can often become paralysis.

“A lot of HR teams overestimate how hard it is to get started,” she said. “They think it has to be this massive transformation with a big tech stack, an AI task force, legal reviews from day one. But I’ve seen teams start small and make real progress by just exploring the tools and experimenting with the work they already do. It’s not as high-stakes as people think.”

This hesitation fuels the myth that AI implementation is a privilege afforded only to companies that can bankroll it. But in reality, AI is already embedded in the tools many organizations use each day. The real barrier isn’t necessarily scale. It’s confidence.

“AI is just another tool in the toolbox — but it doesn’t help us to mystify it this much,” said Linnea Bywall, VP of people at Quinyx. “We need to frame it more like, ‘It’s Tuesday. We just need to get to work.’ We have to eat the elephant one bite at a time.”

3. Building Compliance and Ethical Safeguards

Data from a 2024 McKinsey survey showed that most organizations are still in the early stages of understanding their AI ethics and compliance needs: Only 13% of respondents said their companies have hired AI compliance specialists, while 6% have hired an AI ethics specialist.

This means that most HR leaders are navigating AI safety issues without expert guidance on how AI intersects with employment law, the General Data Protection Regulation (GDPR), Equal Employment Opportunity Commission regulations, and internal policies, leaving them open to penalties, reputational damage, and loss of trust.

But here’s the paradox: If HR leaders spend too long on compliance frameworks, the organization may end up finding workarounds — leading to high-risk, unsanctioned AI use.

“If we don’t do it with that ethical mindset that is embedded in the HR profession, then someone else will do it without that background,” Bywall said. “We need more gas on the pedal rather than having the brakes on.”

2 Long-Term Considerations for the Future

1. Protecting the Human Side of HR

Few HR leaders would say they got into the job because they wanted to be less involved with the people in their company. Yet as AI continues to spread to every corner of the organization, the existential risk of replacing human intelligence with robots is starting to feel very real.

This, in turn, is fueling a belief that AI is coming for HR’s job.

The good news is that HR leaders still feel optimistic about AI’s potential to free them up for more people-led, strategic work. Our 2026 State of People Strategy data shows that while 33% of HR professionals feel less confident about their career security due to their reliance on AI, 78% are somewhat or very excited about AI’s role in HR.

2. Ensuring Fairness and Transparency

When Isaac Asimov published I, Robot in 1950, he tapped into a very real fear of the unknown, one that persists today. To many of us, AI still feels like a black box: a mysterious machine capable of spitting out information without much guidance or oversight.

And for HR leaders, this opacity introduces serious risks related to the implementation of AI-powered tooling in decision-making processes — particularly when those outputs feed into promotions, performance, or pay.

According to data from a 2024 McKinsey survey on AI in the workplace, 71% of employees trust that their employers will act ethically as they roll out AI. But the challenge for HR becomes maintaining that trust — and being able to show, not tell, how any AI-powered tooling reaches its conclusions.

“In an AI context, explainability means you can clearly understand and validate how a system arrived at a specific output,” said Fesinstine. “Not just what the tool does broadly, but how a particular decision, like a flagged performance trend or a skills match, was generated, step by step. That means there’s a traceable logic behind the result, and it can be reviewed, questioned, and explained by a human. You need to be able to walk through the why — especially when decisions affect employees.

“The teams I see approaching this well are identifying tools that offer visibility into how outputs are generated, and building in checkpoints where humans can review and adjust before anything moves forward.”

How to Responsibly Adopt AI in HR

Knowing the risks and potential impact of AI on your organization is one thing — but translating that knowledge into practical action is another.

To scale long-term AI-HR integration, HR leaders need to balance their quest for innovation with governance, and get safeguards in place early. This hinges on treating adoption as a continuous cycle of auditing your processes and priorities, aligning with cross-functional partners, and experimenting with new approaches.

1. Audit HR processes from end to end.

Integrating AI successfully into HR processes rarely starts with the tech itself — it starts with your day-to-day work. Mapping out your processes and the workflows within those processes can help expose gaps and opportunities for AI integration.

“Start by walking your team through a normal week or month. What’s taking up time that doesn’t need to? Where do things bottleneck?” Fesinstine advised. “From there, you can score or categorize potential use cases based on how sensitive the data is, how often the task happens, and how much human oversight you’d still want in the loop.

“Don’t forget to consider the emotional weight of a task, too,” she added. “If something feels high stakes to employees, like performance feedback or internal communications, it’s worth adding extra guardrails or pausing altogether.”
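For teams that want to make this scoring exercise concrete, here is a minimal sketch in Python. It is only an illustration: the 1-to-5 scales, the weighting, the thresholds, and the example tasks are all assumptions made for this sketch, not a method prescribed by Fesinstine or by Lattice. Adjust them to fit your own audit.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    data_sensitivity: int   # assumed scale: 1 (low) to 5 (highly sensitive)
    frequency: int          # assumed scale: 1 (rare) to 5 (daily)
    oversight_needed: int   # assumed scale: 1 (little) to 5 (constant review)
    high_emotional_weight: bool = False


def priority_score(use_case: UseCase) -> int:
    # Hypothetical weighting: frequent tasks score higher, while sensitive
    # data and heavy oversight needs pull the score down.
    return (2 * use_case.frequency
            - use_case.data_sensitivity
            - use_case.oversight_needed)


def categorize(use_case: UseCase) -> str:
    # Tasks that feel high stakes to employees get flagged regardless of score.
    if use_case.high_emotional_weight:
        return "add guardrails or pause"
    score = priority_score(use_case)
    if score >= 4:
        return "pilot first"
    if score >= 0:
        return "pilot with human review"
    return "hold for now"


# Illustrative examples only; the numbers are made up.
candidates = [
    UseCase("Interview scheduling", 1, 5, 1),
    UseCase("Policy FAQ answers", 2, 4, 3),
    UseCase("Compensation benchmarking", 5, 1, 4),
    UseCase("Performance feedback drafts", 4, 2, 5, high_emotional_weight=True),
]

for candidate in candidates:
    print(f"{candidate.name}: {categorize(candidate)}")
```

A spreadsheet works just as well. The point is to score every candidate task against the same criteria so the team can compare them side by side.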

Once you’ve mapped out your processes, you can take a leaf out of the product team’s book and identify the specific outcomes you’re trying to achieve.

Bywall likes to view this process through two complementary lenses: where HR drives impact, and where it can create value for the business.

“I really like this thinking of: what are you really good at, when do you create the most value, what is taking time away from that, and how can you automate those things?” she said. “Know your why for the business — whether that’s driving efficiency, happier employees, or better customer health. Then, find the tool that can get you there.”

To get started, ask:

- Which processes are a priority to fix, and how might AI help?

- Who are our core users for these processes, and how might the integration of AI impact them?

- Where might AI be a nice-to-have, not a priority?

- What does successful integration look like, and how will we measure it?

2. Get internal team members involved from day one.

Once you’ve defined your top uses of AI in your people processes, it’s time to start sense-checking your approach with cross-functional partners.

That AI resume screening tool might look like it solves your recruiting woes, but if the legal team discovers that it auto-rejects candidates without human oversight, it’s a downward slide to a discrimination lawsuit.

Looping in key stakeholders from departments including legal, IT, finance, and operations right from the very beginning will help you spot these risks early, and avoid a compliance quagmire or lengthy backtracking later on.

Share your planned approach with your broader team, and ask:

- Are there any concerns or red flags you see with these use cases?

- Are there any legal, ethical, operational, or compliance requirements to consider before we go to market?

- What are the risks of this approach not working?

- What conditions would make this a dealbreaker?

- Are there any safeguards we need to implement?

3. Choose your vendors wisely.

When the time comes to actually go out to market for some AI tooling, you’ll need to vet any vendor just as thoroughly as you would your potential use cases.

“Having a good supplier vetting process will help you make sure that you’re working with vendors that are in line with the AI Act, are GDPR-friendly, and meet privacy policy requirements,” Bywall said. “When talking to vendors, I focus on security first: I look for where they store data, how they use data, and I think about exactly what kind of data I’ll be putting in.”

Red flags are just as important as green flags, Bywall said. “If they can’t answer how they trained their model, how they have a human in the loop, how they store data, or what their privacy policy is, that’s a red flag. Serious vendors are on top of those things.”

Questions HR leaders can ask to get a solid grip on vendor integrity include:

- Does your AI model train on customer data? Is it by default, or can we opt out?

- Where is your data stored and processed?

- How do you test your product for bias?

- Can you share details on any security and privacy certifications you currently hold?

- Where in your product/model is there a human in the loop?

- Can you explain how your model/tool reaches its outcomes?

- Does your tool support role-based access controls, so that we can assign different levels of access to different users?

- How quickly will our data be wiped from your system if we cancel our contract?
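If you are comparing several vendors, a simple checklist can help you spot the red flags Bywall describes. The sketch below is a hypothetical Python example: the topic names and the sample answers are invented for illustration, and it only checks whether a vendor gave any answer at all, not whether the answer is a good one.

```python
# Topics Bywall says a serious vendor should be able to speak to.
# The key names are assumptions made for this sketch.
REQUIRED_TOPICS = [
    "model_training",
    "human_in_the_loop",
    "data_storage",
    "privacy_policy",
]


def red_flags(vendor_answers: dict) -> list:
    """Return the required topics the vendor left blank or skipped."""
    return [
        topic
        for topic in REQUIRED_TOPICS
        if not (vendor_answers.get(topic) or "").strip()
    ]


# Made-up notes from a vendor call.
example_vendor = {
    "model_training": "Does not train on customer data by default.",
    "human_in_the_loop": "",
    "data_storage": "Stored and processed in-region.",
}

print(red_flags(example_vendor))
```

An empty list only means every topic was addressed. Someone still needs to read the answers and judge their quality.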

4. Define clear lines of ownership.

Securing the right vendor is critical to successful adoption. But to scale long-term, clear ownership and knowing who governs your AI policies and strategy are just as important.

Much like evaluating tooling and features, this should be a cross-functional effort that plays to your broader team’s strengths — but it should be led by HR.

“[Ownership] starts with HR stepping up early and naming AI as part of the people infrastructure — even if they don’t feel 100% confident,” said Fesinstine. “This isn’t just a tech decision — it touches how work gets done, how decisions are made, and how teams function. If HR isn’t helping shape that, someone else will.

“Ownership doesn’t mean HR has to do everything,” she added. “What works best is a shared model: HR owns the use case, the workflow, and the employee experience lens. Legal helps with compliance and risk. IT supports the tech and access. DEIB keeps an eye on how this impacts equity. Everyone has a role to play, and HR’s job is to make sure those roles are clear and coordinated.”

To define these roles, ask:

- Which decisions should HR lead on, and where do we need expertise from internal stakeholders?

- Who will be responsible for reviewing and updating our policies and strategy as our usage of AI evolves?

- Who oversees our relationships and contracts with vendors?

- How often will we collectively review current policies and tooling?

5. Experiment thoughtfully to build knowledge — and don’t wait for “perfect.”

Putting AI to work in your HR processes doesn’t start with rolling it out at scale right away. Instead, it starts with small, safe projects that give HR teams a sandbox to play in and figure out what works, as much as what doesn’t.

But don’t let perfect be the enemy of good when getting started.

“HR is still waiting to be told what to do,” said Fesinstine. “One thing I’ve seen is teams get stuck trying to find ‘perfect’ use cases. You don’t need perfect — you need something you can pilot, observe, and learn from.”

To balance the potential risks and rewards, Fesinstine recommends lowering the stakes with smaller projects and pilots so that teams can try out the tools firsthand.

“If you’re in HR and talking about training, learning, or capability building, you need to apply that same mindset to yourself. Get curious. Spend time inside the tools. Take 30 minutes and try using one to do something you normally do by hand.

“Begin with your own team. Look where people are stuck or stretched thin. Where are the time drains? What’s repetitive? What’s slowing progress? Use those insights to build a small Human plus AI workflow that softens the friction. Don’t try to scale right away — just solve one pain point, reflect on it, and then move to the next.”

Try these practical tips to get started:

- Use generative AI to create custom AI training material that helps the HR team learn the basics and build progressive learning journeys.

- Plug training data or dummy datasets into tools to see how the data is used and processed.

- Run employee pulse surveys alongside pilots to gather feedback on employee sentiment.

- Document all pilots and tests, running a retrospective on what worked, what didn’t, and what you’d change.
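For the dummy dataset tip above, a short script can generate records that look like HR data but describe no real person. This is a minimal Python sketch using only the standard library. The field names, department list, and value ranges are assumptions chosen for illustration, so swap in whatever columns the tool you are testing expects.

```python
import csv
import io
import random

# Hypothetical columns and values for a test dataset.
FIELDNAMES = ["employee_id", "department", "tenure_years", "engagement_score"]
DEPARTMENTS = ["Engineering", "Sales", "Support", "Finance"]


def make_dummy_employees(count: int, seed: int = 0) -> list:
    """Build synthetic employee rows; a fixed seed makes pilots repeatable."""
    rng = random.Random(seed)
    rows = []
    for index in range(1, count + 1):
        rows.append({
            "employee_id": f"TEST-{index:04d}",  # clearly fake identifier
            "department": rng.choice(DEPARTMENTS),
            "tenure_years": rng.randint(0, 15),
            "engagement_score": round(rng.uniform(1.0, 5.0), 1),
        })
    return rows


def to_csv(rows: list) -> str:
    """Serialize the rows as CSV text that can be saved or uploaded."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDNAMES)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


sample_rows = make_dummy_employees(5, seed=42)
print(to_csv(sample_rows))
```

Because nothing here is derived from real records, you can share the output with a vendor sandbox without exposing employee information.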

People-First, AI-Powered With Lattice

The path to effective and responsible AI adoption in HR isn’t about perfection, fear, or hugging the rule book close — it’s about equipping teams with the confidence to experiment while keeping ethics and trust front and center.

The organizations getting this right aren’t necessarily the ones with the plushest budgets or biggest tech stacks. They start with clarity and transparency as central principles — anchoring priorities, stakeholders, and use cases to build guardrails that encourage responsible use while maintaining flexibility.

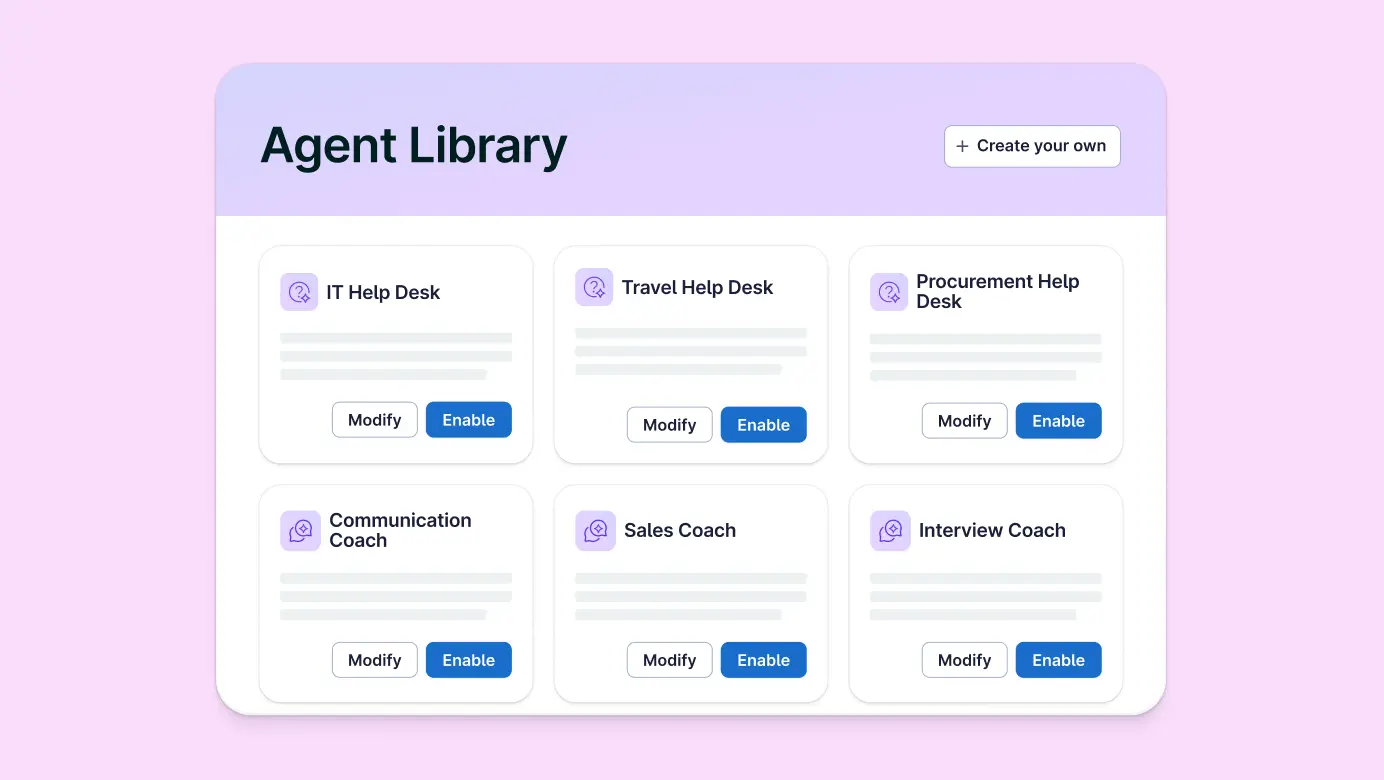

This same philosophy underpins how we build AI into our platform at Lattice. Our approach is centered on putting people first — using AI to support, not replace, human decision-making, protect privacy, and empower better outcomes. With Lattice, organizations can access:

- Enterprise-grade compliance with SOC 2 Type II, CCPA, and GDPR standards, ensuring data never leaves your Lattice environment

- Customizable controls for admins to manage or disable AI assistance depending on organizational needs

- Built-in bias detection and mitigation for performance and engagement modules

- Privacy guardrails and data handling practices aligned with industry standards

Find out more about how Lattice approaches AI and schedule a personalized demo with our team.

“AI is, at best, a GPS in highly subjective situations, and just like you wouldn’t let your GPS drive you off a cliff, you don’t blindly follow AI recommendations that contradict your managerial judgment,” said Alexis MacDonald, VP of People at Klue Labs Inc.

Watch the webinar on demand and leave with a clearer view of what’s worth your time when it comes to AI hype.

Download our free workbook for a six-step framework that will help you:

- Evaluate where and how to leverage AI to the greatest effect

- Build policies and best practices to standardize how employees use AI

- Create an implementation strategy with internal stakeholders

- Measure the success of your initiatives